Adaptive and Generative Music

Date : 2024-11-13 Entry ID : 16524 Entry type : comment

Adaptive music (also referred to as 'dynamic music') is game music that reacts to changes in gameplay state. It has been used in games since the 1970s and encompasses a variety of techniques, from tempo and pitch changes to re-sequencing of precomposed pieces and dynamic mixing.

Generative music (also known as 'procedural' or 'algorithmic' music) is generated at run-time in software, either by generating individual notes or by real-time synthesis. Generative methods can be used to make game music that is highly adaptive, or even interactive (generated directly from player input). This is not always the case, however: there are also examples of non-adaptive generative music.

This article will provide an overview and history of these techniques and analyze games that employ various adaptive or generative music techniques.


Adaptive Music

In this section, we will investigate the history of adaptive music and its most common techniques.

History of Adaptive Music in Arcade Games

The earliest examples of adaptive and generative music in video games were seen in arcade games from the 1970s, and were often based on pitch and tempo. Pitch and tempo were easy to modify, since the music of these games was note-based.

Featured interactive music based on movement direction.

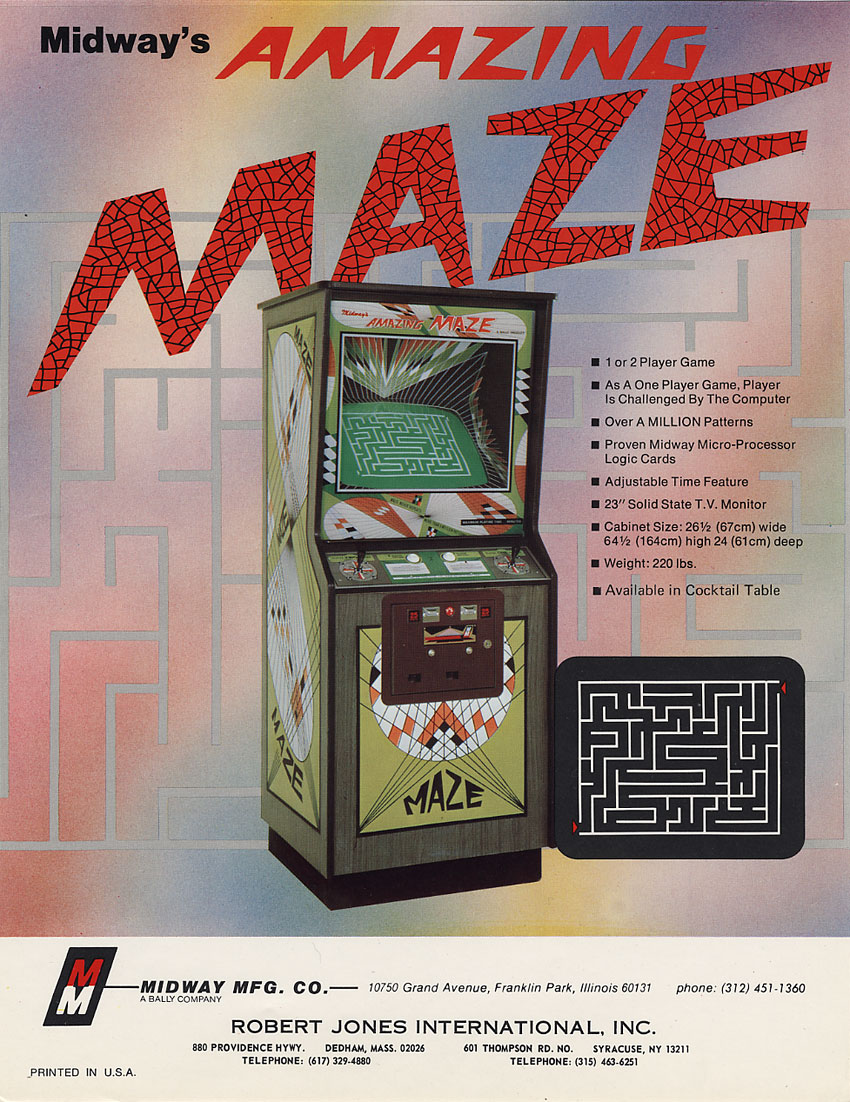

A very early example of music that changed based on player input is the 1976 arcade game The Amazing Maze Game by Midway. In this game, you had to work your way through a randomly generated maze, trying to outpace an AI-controlled player. As you moved around the maze, the game accompanied you with rhythmic two-note patterns. The notes played depended on the direction you held the joystick, and stopped when you returned the joystick to neutral. This is an example of interactive music, meaning music that is directly controlled by player input. More on this later.

Tempo increased with level progress.

In Taito's classic shoot 'em up Space Invaders from 1978, you control a lone turret fixed to the bottom of the screen and, with a single shot at a time, must defend Earth from descending rows of evil aliens. The game was a groundbreaking success, estimated to have grossed $4 billion in revenue in its first couple of years, equivalent to around $14 billion adjusted for inflation.[1] Its success helped establish electronic arcade games as a viable business and established the shoot 'em up genre.

The Space Invaders enemies are positioned in a grid of 5 rows of 11 aliens, 55 in total. They march from side to side and slowly descend towards your turret. As you shoot aliens, the remaining enemies pick up speed, until the very last alien moves very fast, making it difficult to hit with your single-shot cannon.[2][3]

Your shots are accompanied by 'pew pew' sounds and explosions with deep booms, but the advancing rows of aliens have a unique accompaniment in the form of a sequence of descending deep notes:

C#1, B0, A0, G#0

The background music follows the accelerating gameplay by increasing the tempo of the repeating note sequence. Initially, the tempo is around 69 BPM, and at its fastest the notes play at 10 times the initial tempo, around 690 BPM. Thus, the music closely tracks the difficulty of the gameplay and increases tension.[4]
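The figures above come from the footnotes: the alien movement follows from a round-robin update at 60 frames per second, and the tempo from the measured time between notes. A minimal Python sketch of both calculations; the function names are hypothetical, and the code only reproduces the arithmetic, not the game's actual implementation.

```python
FPS = 60  # Space Invaders display refresh rate, as cited in the footnote


def move_period_s(alien_count: int, fps: int = FPS) -> float:
    """Round-robin update: one alien moves per frame, so each alien
    moves once every `alien_count` frames."""
    return alien_count / fps


def period_to_bpm(period_s: float) -> float:
    """Convert the time between consecutive notes (seconds) to BPM."""
    return 60.0 / period_s


print(f"full grid: {move_period_s(11 * 5):.2f} s per move")   # 55 aliens
print(f"last alien: {move_period_s(1):.3f} s per move")       # moves every frame
print(f"music: {period_to_bpm(0.870):.0f} BPM -> {period_to_bpm(0.087):.0f} BPM")
```

Fewer aliens means a shorter round-robin cycle, which is why the last alien moves once per frame.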

Non-adaptive musical changes.

Carnival (Sega, 1980) plays the classic carnival tune 'Sobre las Olas'. As you play a level, the song loops, and with every loop iteration the notes transpose upwards and the tempo increases slightly, building tension in a similar way to Space Invaders. However, this game doesn't have adaptive music, as the progression is the same for every play session, regardless of player actions.

This game could easily have had adaptive music based on how many targets the player hits, but Sega's developers opted to let the music progress at its own pace. It could be argued that this approach feels more musically coherent than tempo and pitch changes in the middle of a musical loop, and the tension build-up will roughly correspond to a normal play session anyway.
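To make the contrast with Space Invaders concrete, here is a minimal sketch of a non-adaptive progression in which pitch and tempo depend only on the loop count. The one-semitone step, 5% tempo increase, and 120 BPM starting tempo are illustrative assumptions; the actual amounts used in Carnival are not given here.

```python
def loop_settings(loop_index: int, base_bpm: float = 120.0,
                  semitones_per_loop: int = 1, tempo_factor: float = 1.05):
    """Return (transposition in semitones, tempo in BPM) for a given loop.

    Non-adaptive: the result depends only on how many times the song has
    looped, never on targets hit or any other game state.
    """
    transpose = loop_index * semitones_per_loop
    bpm = base_bpm * tempo_factor ** loop_index
    return transpose, bpm


for i in range(3):
    semis, bpm = loop_settings(i)
    print(f"loop {i}: +{semis} semitones at {bpm:.1f} BPM")
```

An adaptive version would replace `loop_index` with a game-state value such as the number of targets hit.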

We have now seen three examples of music in early arcade games:

- music that directly reflected player input, referred to as interactive music (The Amazing Maze Game),
- music whose tempo changed to match gameplay tension ramping up, a type of adaptive music (Space Invaders),
- music that accompanied the play session with no regard for the actual gameplay state, which we can call non-adaptive, non-interactive music (Carnival).

In the following, we will explore a fundamental type of adaptive music focused on skipping between sections of music based on gameplay.

Horizontal re-sequencing

Horizontal re-sequencing is when a game soundtrack is divided into segments that are jumped between based on the game state. 'Horizontal' refers to the musical timeline, which is usually laid out horizontally in music software and musical notation.
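As a rough illustration of hard-cut re-sequencing, the sketch below models a soundtrack as looping segments keyed by game state. The class, state names, segment names, and lengths are hypothetical; a real system would schedule audio or note data rather than return tuples.

```python
class HardCutPlayer:
    """Horizontal re-sequencing with hard cuts.

    The soundtrack is split into looping segments, one per game state.
    A state change jumps straight to the start of the new segment,
    ignoring where we are in the bar or which key we are in.
    """

    def __init__(self, segments: dict, state: str):
        self.segments = segments   # state -> (segment name, length in beats)
        self.state = state
        self.beat = 0              # position within the current segment

    def set_state(self, state: str) -> None:
        if state != self.state:
            self.state = state
            self.beat = 0          # hard cut: restart immediately

    def tick(self) -> tuple:
        """Advance one beat; return (segment name, beat) that just played."""
        name, length = self.segments[self.state]
        played = (name, self.beat)
        self.beat = (self.beat + 1) % length
        return played


# Hypothetical segments: (name, length in beats)
player = HardCutPlayer({"explore": ("explore_loop", 16), "boss": ("boss_loop", 8)}, "explore")
for _ in range(5):
    player.tick()
player.set_state("boss")
print(player.tick())  # ('boss_loop', 0)
```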

Pinball

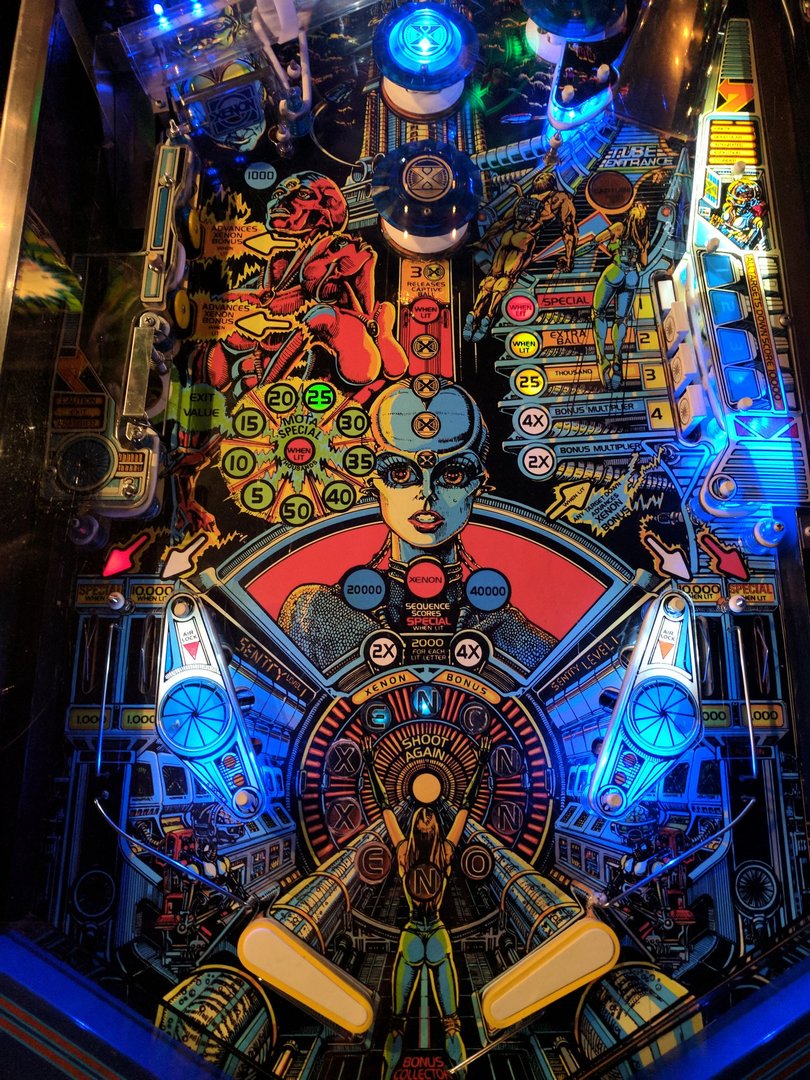

Adaptive music system and sample playback, designed by Suzanne Ciani.

Games from the 1980s with horizontal re-sequencing tended to do hard cuts between music parts, completely ignoring key and rhythm.

An early example of this approach is the pinball table Xenon (Bally, 1980). The pinball sound board has a General Instrument AY-3-8910 PSG sound chip, a Motorola 6802 CPU, and 7 ROM chips. The PSG is mainly used for sound effects, and I'm assuming that the MC6802 performs sample playback from ROM using a D/A converter.[5]

The table switches between different music loops and voice samples, and seems capable of playing only a single sampled sound at a time. The sound for Xenon was designed and composed by Suzanne Ciani.[6] The resulting experience is very fragmented and chaotic, which arguably fits the chaotic nature of pinball games quite well.

Aside from being a very early example of a pinball table with music, Xenon is also unique for using voice samples, something rarely heard in any game before 1980 (Stratovox (1980) and Berzerk (1980) being the earliest examples I encountered). Ciani chose to use vocal grunts and breath sounds to respond to hitting targets, making it feel like the machine was alive.

Many pinball tables in the first part of the 1980s didn't have music at all, mostly focusing on otherworldly sound effects. Williams pinball machines of that era often used Eugene Jarvis' G-sound software synthesizer-based system, which is discussed later.

Later in the 1980s, the horizontal approach to adaptive music with hard cuts became standard, and it has been used ever since. Tables such as Cyclone (Williams, 1988) used adaptive FM-synthesized music with sampled sound effects layered on top. The music cuts between different segments based on changes in game state and targets hit by the ball.

Video Games

Horizontal re-sequencing with hard cuts.

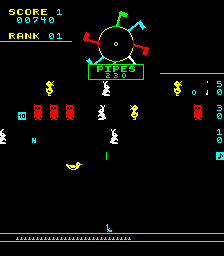

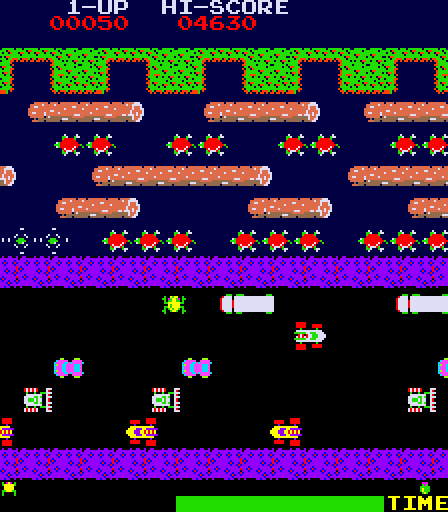

In arcade video games, horizontal re-sequencing was also used. An early example is Konami's Frogger (1981), which switches between many musical parts based on your progress.[7]

Nintendo's influential Super Mario Bros. (1985) switches between Koji Kondo's music parts based on game state. The normal music is interrupted when you pick up a star and become invincible, mysterious music plays when you go underground, and the music cuts to a fanfare when you reach the end of a level.

The inspiration from pinball machines also arrived in home video games, such as Pinball Dreams (1992) by Digital Illusions (also known as DICE, the creators of the Battlefield games). In this pinball simulator, tracker-based music changes instantly based on game state, creating a very dynamic and responsive soundtrack, exactly like real pinball tables.

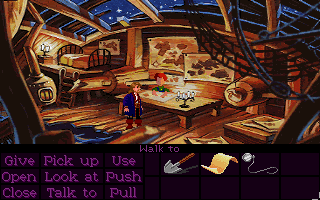

The iMuse system revolutionized adaptive game music.

Lucasfilm Games refined horizontal re-sequencing for Monkey Island 2 (DOS) by creating iMuse, a system for hand-written transitions between different music parts.[8][9][10] This approach to adaptive music has been the norm for most large video game productions in the 2010s and 2020s. However, while iMuse was designed for MIDI scores, modern game music is almost exclusively composed using audio stems. Modern audio middleware solutions such as Wwise or FMOD implement the same basic ideas for adaptive music as iMuse, but mainly for audio stems.
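The core idea of deferring a switch to a musically sensible point can be sketched as follows. This is a simplified model in the spirit of iMuse, not its actual API: switching only on bar lines, and one-bar bridges keyed by (from, to) part pairs, are assumptions made for illustration.

```python
class TransitionPlayer:
    """Deferred transitions in the spirit of iMuse (not its actual API).

    The game may request a new part at any time, but the switch only
    happens on the next bar line. If the composer wrote a bridge for that
    pair of parts, the bridge plays for one bar before the destination.
    """

    def __init__(self, part, beats_per_bar=4, bridges=None):
        self.part = part
        self.beats_per_bar = beats_per_bar
        self.bridges = bridges or {}   # (from_part, to_part) -> bridge part
        self.beat = 0                  # beats since playback started
        self.pending = None            # part most recently requested by the game
        self.after_bridge = None       # destination once a bridge has played

    def request(self, target):
        self.pending = target

    def tick(self):
        """Advance one beat and return the part heard on that beat."""
        if self.beat % self.beats_per_bar == 0:      # bar line: allowed switch point
            if self.after_bridge is not None:
                self.part, self.after_bridge = self.after_bridge, None
            elif self.pending is not None:
                bridge = self.bridges.get((self.part, self.pending))
                if bridge is None:
                    self.part = self.pending         # no bridge: switch on the bar line
                else:
                    self.part, self.after_bridge = bridge, self.pending
                self.pending = None
        heard = self.part
        self.beat += 1
        return heard


player = TransitionPlayer("calm", bridges={("calm", "combat"): "calm_to_combat"})
heard = [player.tick(), player.tick()]
player.request("combat")                             # requested mid-bar
heard += [player.tick() for _ in range(7)]
print(heard)
```

The request arrives on beat 2, but the bridge only starts on the next bar line (beat 4), and combat music follows one bar later.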

Horizontal re-sequencing can be supplemented by adding 'stingers' on top of the re-sequenced stems. Stingers provide instant or near-instant musical reactions to the game state without interrupting the flow of the underlying music, whose structure might not allow quick transitions. A classic example of stingers is the horror movie trope of 'scare chords', where shocking events are accompanied by sudden orchestral stabs. Dead Space uses this technique when enemies appear on the screen for the first time. Condemned 2: Bloodshot used a similar technique during certain fights, where the fight sound effects were replaced by musical stingers.

Game List

Examples of games using horizontal re-sequencing:

| Game | Year | Type |
|---|---|---|
| Xenon | 1980 | hard |
| Frogger | 1981 | hard |
| Cyclone | 1988 | hard |
| Pinball Dreams | 1992 | hard |
| Monkey Island 2 | 1991 | transitions |
| Metal Gear Solid | 1998 | hard/transitions |
| 140 | 2013 | game state/location |

Vertical remixing/re-orchestration

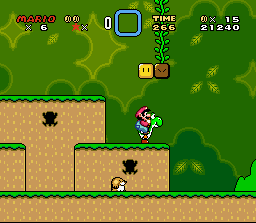

Simple vertical remixing: adding bongos when on Yoshi

Vertical remixing is dynamically changing a piece’s instrumentation by attenuating musical layers based on gameplay state.

A simple example of this is the bongo track added in Super Mario World (1990) when you are riding Yoshi. The musical piece continues playing without interruption, and the bongo track is muted or unmuted depending on whether you're riding Yoshi.
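A minimal sketch of the Yoshi bongo example, treating the music as in-sync layers whose gains are chosen from game state. The layer names and sample values are hypothetical, and the real game works on note-based channels rather than mixed audio samples.

```python
LAYERS = ("melody", "bass", "drums", "bongos")   # hypothetical layers, all playing in sync


def layer_gains(riding_yoshi: bool) -> dict:
    """Vertical remixing: every layer keeps playing; game state only
    decides which layers are audible (1.0) or muted (0.0)."""
    gains = {name: 1.0 for name in LAYERS}
    gains["bongos"] = 1.0 if riding_yoshi else 0.0
    return gains


def mix_frame(samples: dict, gains: dict) -> float:
    """Mix one sample frame: each layer's sample weighted by its gain."""
    return sum(samples[name] * gains[name] for name in LAYERS)


frame = {"melody": 0.5, "bass": 0.25, "drums": 0.0, "bongos": 0.125}
print(mix_frame(frame, layer_gains(False)), mix_frame(frame, layer_gains(True)))  # 0.75 0.875
```

Because the bongo layer never stops, unmuting it lands exactly in time with the rest of the piece.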

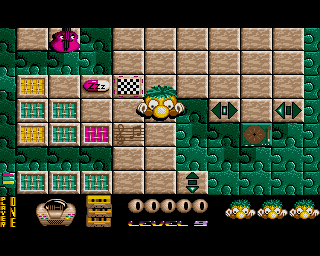

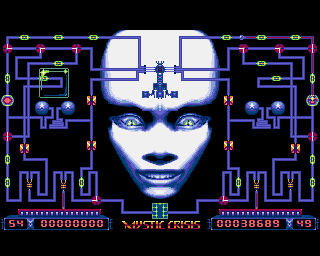

Music puzzle game with vertical remixing

The same year, the Amiga game Jumping Jack'Son used vertical remixing as part of the game mechanics. It's a top-down puzzle game where you control a jumping weirdo with the goal of placing LPs on record players. Every time you place an LP, a new music track is added to the mix. This example of vertical remixing is fundamentally different from Super Mario World, as the basic game mechanics are directly represented in the music. The game has music by Stéphane Picq.

A more sophisticated example can be seen in Grant Kirkhope's score for Banjo-Kazooie (1998), where several note-based tracks are faded in or out depending on your location in the world.[11]

Austin Wintory's score for The Pathless uses vertical remixing based on character movement speed, adding excitement to the soundtrack as the player gets into a flow of sliding through the world. The game has bosses that roam the world, and each boss is associated with a particular instrument in the soundtrack. When a boss is defeated, the instrument associated with that boss is permanently removed from the score.[12]
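The Pathless behavior described above combines a continuous control (movement speed) with a permanent change (defeated bosses). A hypothetical sketch of that combination, with made-up layer names and linear fade-in thresholds standing in for whatever curves the game actually uses:

```python
class SpeedLayers:
    """Speed-driven vertical remixing with permanently removable layers.

    `thresholds` maps each layer to the normalized movement speed (0..1)
    at which it reaches full volume; below that it fades in linearly.
    """

    def __init__(self, thresholds: dict):
        self.thresholds = dict(thresholds)
        self.removed = set()

    def remove(self, layer: str) -> None:
        """Silence a layer for good, e.g. when its associated boss is defeated."""
        self.removed.add(layer)

    def gains(self, speed: float) -> dict:
        speed = max(0.0, min(1.0, speed))
        return {
            name: 0.0 if name in self.removed else min(1.0, speed / full_at)
            for name, full_at in self.thresholds.items()
        }


layers = SpeedLayers({"strings": 0.5, "percussion": 1.0})   # hypothetical layers
print(layers.gains(0.25))   # {'strings': 0.5, 'percussion': 0.25}
layers.remove("strings")
print(layers.gains(1.0))    # {'strings': 0.0, 'percussion': 1.0}
```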

Game List

Games that use vertical remixing:

| Game | Year | Type |
|---|---|---|
| Super Mario World | 1990 | game state |
| Banjo-Kazooie | 1998 | location |
| Metal Gear Solid | 1998 | game state |
| 140 | 2013 | game state/location |

Hybrid Approaches

State-based hybrid adaptive music for stealth gameplay

Combining horizontal re-sequencing and vertical remixing ends up being a powerful way of creating adaptive music. Metal Gear Solid (1998) is an early example of this approach.

Similarly to Monkey Island 2, the music for Metal Gear Solid is note-based. When the stealth game state (Normal, Alert, Evasion) suddenly changes, the music performs a hard switch to a different music part. However, other changes are slower and more predictable, which enables smooth transitions by fading out a subset of the 12 music tracks (vertical remixing).

- Normal: The starting state, calm background music.

- Alert: When Snake is spotted, the famous alert sound plays (a diminished arpeggio chord), and the music immediately switches to an Alert state, which is faster and in a different key.

- Evasion: When Snake has been hidden for a while, the game state changes to 'Evasion', some music tracks start fading out, and the tempo gradually slows down.

- Normal: When hidden for a longer while, the game state changes to 'Normal', and there is a hard switch to the calm background music.
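The state flow in the list above can be sketched as a small state machine that records how the music should react to each transition. The timer values are hypothetical, and the Evasion-to-Alert transition is an assumption not described above; this models the logic only, not the game's sound driver.

```python
from enum import Enum


class Stealth(Enum):
    NORMAL = "normal"
    ALERT = "alert"
    EVASION = "evasion"


# How the music reacts to each state change, following the list above:
# sudden changes get hard cuts, slow and predictable ones get fades.
MUSIC_REACTION = {
    (Stealth.NORMAL, Stealth.ALERT): "hard cut to alert music",
    (Stealth.ALERT, Stealth.EVASION): "fade out some tracks, slow tempo",
    (Stealth.EVASION, Stealth.NORMAL): "hard cut to calm music",
    # Assumption: being spotted again during Evasion also cuts hard to Alert.
    (Stealth.EVASION, Stealth.ALERT): "hard cut to alert music",
}


class StealthMusic:
    # Hypothetical timings: seconds spent hidden before each slow transition.
    ALERT_TO_EVASION_S = 10.0
    EVASION_TO_NORMAL_S = 20.0

    def __init__(self):
        self.state = Stealth.NORMAL
        self.hidden_for = 0.0
        self.log = []

    def _change(self, new_state):
        self.log.append(MUSIC_REACTION[(self.state, new_state)])
        self.state = new_state
        self.hidden_for = 0.0

    def update(self, dt, spotted):
        """Advance game time by `dt` seconds."""
        if spotted:
            self.hidden_for = 0.0
            if self.state != Stealth.ALERT:
                self._change(Stealth.ALERT)
            return
        self.hidden_for += dt
        if self.state == Stealth.ALERT and self.hidden_for >= self.ALERT_TO_EVASION_S:
            self._change(Stealth.EVASION)
        elif self.state == Stealth.EVASION and self.hidden_for >= self.EVASION_TO_NORMAL_S:
            self._change(Stealth.NORMAL)


music = StealthMusic()
music.update(1.0, spotted=True)
for _ in range(30):
    music.update(1.0, spotted=False)
print(music.log)
```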

In a debug view of the individual audio channels, you can see that entering the alert state is immediate, which calls for a hard music switch, masked by the alert sound. However, switching to the 'Evasion' state and later back to the 'Normal' state always takes time, which enables a smooth transition, in this case using fading and tempo change.[13]

Austin Wintory's score for Journey (2012) primarily uses horizontal re-sequencing, as every area has a piece of music that starts when the area is reached. When another player joins and their character is close to your character, harp and viola parts are added on top, an example of vertical remixing.[14]

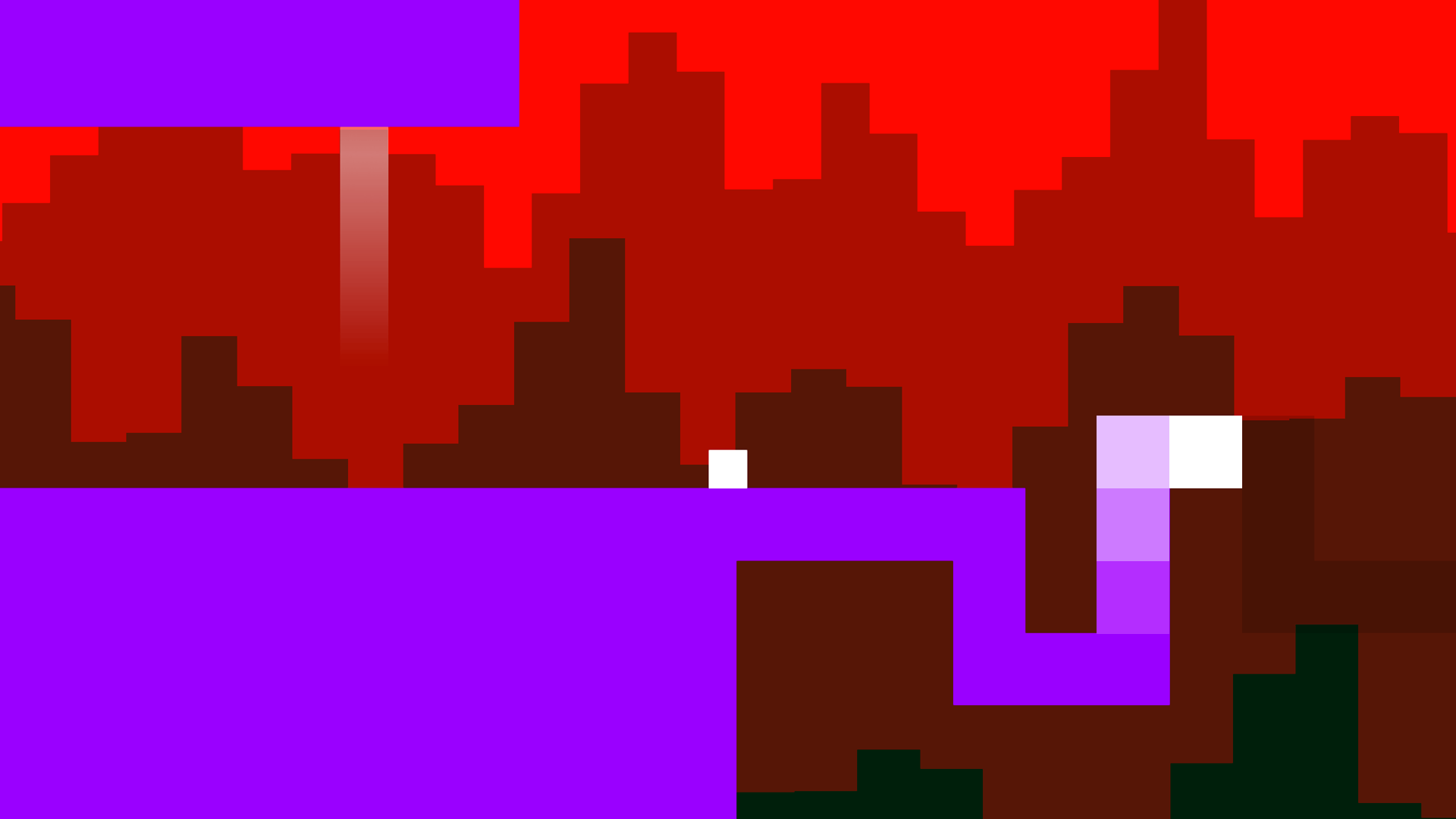

Combines horizontal re-sequencing and vertical remixing.

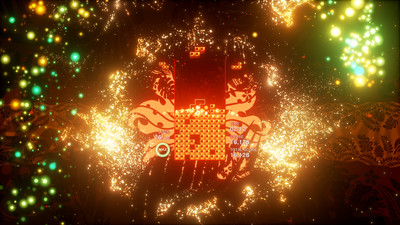

Jakob Schmid's score for music platformer 140 (2013) uses vertical remixing, where looping music tracks are attenuated, muted/unmuted, as well as filtered based on gameplay progress. For the 4th boss fight, 20 loops are running simultaneously, most of them muted, and then dynamically attenuated, unmuted, and filtered depending on gameplay state.

However, whenever a 'key' is delivered, all loops are stopped and a new music part starts with all new loops. Thus, the game uses both horizontal re-sequencing and vertical remixing.[15]

Generative Music

Generative music is created by a system rather than manually by a composer.

Music can be generated at the level of individual notes, or at the level of software synthesis of the musical sounds themselves.

Note-generative music

Note-generative music is the more common approach to generative game music: individual notes are generated at runtime, typically as MIDI messages or an equivalent representation of notes and parameter updates.

The generated notes can be played back by hardware synthesizers such as the Commodore 64 'SID' chip, or be used to trigger a hardware sampler such as the Commodore Amiga 'Paula' chip, or a software sampler implemented in audio middleware or game engine audio systems.

A subset of note-generative music generates notes directly from player input, which is referred to as 'interactive' music.[10] More on this later.

Uses PureData to generate music in creature editor.

A more subtle approach is note generation based on game state instead of player input. One of the most famous examples is Kent Jolly and Brian Eno using PureData for the editor music in Spore (2008). Spore mostly uses note generation to trigger samples.[16]

Nintendo DS music toy Electroplankton (2005) enabled the player to set up generative music systems based on 2D physics and other mechanisms.

Other examples of this technique can be seen in Mini Metro (2016), Uurnog (2017), Rytmos (2023), Everyday Shooter, and ODDADA (2024).

Note generation is often used for pitched instruments, but can be used very effectively for percussion or other non-pitched instruments as well. Rise of the Tomb Raider has a 'Dynamic Percussion System' developed by Daniel Brown, which takes a note-based subtractive approach where percussion hits are removed based on the 'energy' level of gameplay and other game parameters.[17][18][19]
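A subtractive approach can be illustrated by starting from a dense pattern and removing hits as the energy drops. This is a hypothetical sketch, not Daniel Brown's system: the pattern, the per-hit importance values, and the threshold rule are all assumptions.

```python
# One bar of 16 steps: (step, instrument, importance 0..1).
# The pattern and importance values are illustrative, not taken from the game.
PATTERN = [
    (0, "kick", 1.0), (4, "snare", 0.9), (8, "kick", 0.8), (12, "snare", 0.9),
    (2, "tom", 0.5), (6, "tom", 0.4), (10, "tom", 0.5), (14, "tom", 0.3),
    (3, "shaker", 0.2), (7, "shaker", 0.1), (11, "shaker", 0.2), (15, "shaker", 0.1),
]


def thin_pattern(pattern, energy):
    """Subtractive percussion: start from the densest pattern and drop
    hits whose importance is below (1 - energy), so low energy keeps
    only the most important hits. Returns (step, instrument) by step."""
    threshold = 1.0 - energy
    return sorted((step, inst) for step, inst, importance in pattern
                  if importance >= threshold)


print(thin_pattern(PATTERN, 0.5))
# [(0, 'kick'), (2, 'tom'), (4, 'snare'), (8, 'kick'), (10, 'tom'), (12, 'snare')]
```

Removing hits from a fully composed pattern, rather than adding random ones, keeps even sparse versions recognizably related to the dense one.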

Although these games clearly show examples of generative music, the distinction between note-generative and adaptive sequencing is somewhat subtle.

We could imagine adaptive sequencing that is so fine-grained that almost every note is selected based on game state. In that case, would we call it generative? Perhaps.

Game List

Examples of games with note-based generative music:

| Game | Year | Type | Synthesis |
|---|---|---|---|
| Ballblazer | 1985 | randomized | HW synth |
| Otocky | 1987 | interactive, adaptive | HW synth (Famicom) |
| Extase | 1990 | interactive | HW sampler (Amiga) |
| Rez | 2001 | interactive | HW sampler (PS2) |
| Spore | 2008 | adaptive | SW sampler (PureData) |
| Rise of the Tomb Raider | 2015 | adaptive | SW sampler |
| Mini Metro | 2016 | adaptive | SW sampler |
| Killer Instinct | 2018 | interactive | SW sampler |
| Tetris Effect | 2018 | interactive | SW sampler |
| Ape Out | 2019 | adaptive | SW sampler |
| Ynglet | 2021 | adaptive | SW sampler |

Abbreviations: HW = hardware, SW = software.

Realtime music synthesis

A less common approach is to generate music at runtime with a software synthesizer.

Tim Follin wrote software-synthesized music for the ZX Spectrum, referred to as 1-bit music because it was generated by rapidly turning a 'beeper' (a tone generator with no volume control) on and off, effectively acting as a 1-bit D/A converter.

Using this technique, Follin could achieve 5-voice polyphony on a 3.5 MHz machine.[20]
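To show how several voices can share a single on/off output, here is a minimal sketch that mixes voices by ORing short pulses from integer phase accumulators. It is one simple technique for illustration, not a reconstruction of Follin's engines; the 44.1 kHz sample rate and 10% pulse width are assumptions (a Spectrum routine would instead be timed in CPU cycles).

```python
def one_bit_render(freqs_hz, num_samples, sample_rate=44_100, pulse_width=0.1):
    """Mix several voices into a single on/off (1-bit) output stream.

    Each voice has an integer phase accumulator and contributes a short
    pulse at the start of every period; the output bit is on whenever
    any voice is inside its pulse. Short pulses overlap only part of the
    time, so each voice's pitch remains audible.
    """
    phase_one = 1 << 16                                  # one full period in accumulator units
    increments = [round(f * phase_one / sample_rate) for f in freqs_hz]
    pulse_len = int(pulse_width * phase_one)
    phases = [0] * len(freqs_hz)
    out = []
    for _ in range(num_samples):
        out.append(1 if any(p < pulse_len for p in phases) else 0)
        phases = [(p + inc) % phase_one for p, inc in zip(phases, increments)]
    return out


# Hypothetical chord: three voices rendered to one bit.
stream = one_bit_render([220.0, 277.18, 329.63], num_samples=1000)
print(sum(stream) / len(stream))   # fraction of time the beeper is on
```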

Here are a few examples of Tim Follin's 1-bit music on the ZX Spectrum:

- Agent X (1985)

- Vectron (1985)

- Chronos (1987)

Peggle Blast (2014) used Wwise's built-in tone generators and the SoundSeed Air plugin to generate sound effects and music at runtime, a space-saving measure that kept all audio under 5 MB of disk space.[21]

The in-world synthesizers of Fract.OSC (2014) are implemented using PureData and generate music in realtime.[22] Another example of PureData in games is Leonard J. Paul's 'Sim Cell'.[23]

Generates ambient music using real-time synthesis.

A suite of custom software synthesizers generates all the ambient background music for Cocoon (2023). The synthesizers span different types of synthesis, including subtractive synthesis, frequency modulation synthesis, and granular synthesis. They were implemented in C++ as plugins for the audio middleware FMOD and controlled by game parameters.[24]

Games that use specialized audio hardware such as the SID chip to generate musical sounds shouldn't be counted as real-time generative music. The key observation is that the sound generation is designed and implemented by the hardware manufacturer, not by the composer or programmer working on the game.

In most cases, this distinction is clear. Super Mario Bros. doesn't implement real-time generative music, because it sends predefined sequences of note events to the NES audio processing unit (APU). It is adaptive, because the selection of note events changes with game state, but it is not real-time generative, because the sound is generated by the normal function of the APU.

This distinction can get quite subtle. Consider a game that randomly generates values that are sent to the sound registers on the Sega Genesis. Here, the timbre will change randomly with the values sent to the sound registers. Is this real-time generated audio? Possibly.

Game List

Examples of games with realtime synthesized music:

| Game | Year | Type |
|---|---|---|
| Agent X | 1985 | non-adaptive |
| Vectron | 1985 | non-adaptive |
| Chronos | 1987 | non-adaptive |
| Peggle Blast | 2014 | adaptive |
| Fract.OSC | 2014 | adaptive |
| Cocoon | 2023 | adaptive |

Adaptive Generative music

Generative game music is often adaptive, meaning that the music reacts to changes in game state.

This can be achieved by removing or modifying the notes played, as well as by changing the timbre of instruments. In many respects, making generative game music adaptive is easier than making linear game music adaptive, and this is often cited as the reason for making the music generative in the first place.

An early example is Otocky (1987), which generates musical notes when the player attacks. Picking up different instruments corresponds to picking up weapons: the sound changes along with the attack pattern.

Other games focus on representing the current state of the game musically, such as Spore (2008), where the generated music in the creature editor uses different scales depending on which type of creature you're creating (e.g. minor for predators, major for herbivores).[16]
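The Spore example, where game state picks the scale, can be sketched with a random walk over scale degrees. Spore's actual implementation used PureData; this Python version, its names, and the walk rules are hypothetical and only illustrate the idea of state selecting the pitch material.

```python
import random

MAJOR = [0, 2, 4, 5, 7, 9, 11]            # semitone offsets from the root
NATURAL_MINOR = [0, 2, 3, 5, 7, 8, 10]

# Mapping from the example above: minor for predators, major for herbivores.
SCALE_FOR_DIET = {"predator": NATURAL_MINOR, "herbivore": MAJOR}


def generate_phrase(diet, length, root_midi=60, seed=None):
    """Generate MIDI note numbers by a random walk over two octaves of the
    scale selected by game state (the creature's diet)."""
    rng = random.Random(seed)
    scale = SCALE_FOR_DIET[diet]
    top_degree = 2 * len(scale) - 1
    degree = 0
    notes = []
    for _ in range(length):
        # Step up or down by one or two scale degrees, clamped to the range.
        degree = max(0, min(top_degree, degree + rng.choice([-2, -1, 1, 2])))
        octave, step = divmod(degree, len(scale))
        notes.append(root_midi + 12 * octave + scale[step])
    return notes


print(generate_phrase("predator", 8, seed=1))
```

Changing the creature type swaps the scale while the walk itself stays the same, so the music's character changes without new composition.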

Generates notes from state of Metro Map.

Similarly, Rich Vreeland's generative soundtrack for Mini Metro (2016) generates notes directly from the metro map and the state of the trains. It uses a Unity plugin named G-Audio to play samples scheduled on beats.[25]

Cocoon (2023) generates notes and changes timbre as a result of game state, exemplified in the 2nd boss fight, based on cloak state and movement speed.

Ape Out (2019) generates a drum solo based very closely on player actions. A particularly interesting feature is that killing enemies results in cymbal hits, and the enemy's position on the screen when killed selects a cymbal at a corresponding location on a drum kit.[26]
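The Ape Out cymbal mapping can be illustrated as a nearest-neighbor lookup from screen position to drum kit position. The cymbal coordinates below are hypothetical, and the game's real mapping may differ.

```python
import math

# Hypothetical cymbal positions on a drum kit seen from above, normalized
# to 0..1 so they can be compared with normalized screen coordinates.
CYMBALS = {
    "hi-hat": (0.15, 0.55),
    "crash": (0.3, 0.15),
    "ride": (0.85, 0.45),
    "china": (0.7, 0.1),
}


def cymbal_for_kill(x, y, width, height):
    """Pick the cymbal closest to where the enemy died on screen."""
    nx, ny = x / width, y / height
    return min(CYMBALS, key=lambda name: math.dist((nx, ny), CYMBALS[name]))


print(cymbal_for_kill(1800, 500, 1920, 1080))   # ride
```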

Ynglet

One of the best examples of highly adaptive generative music is the unique platformer Ynglet (2021) by prolific indie developer Nicklas Nygren. Seemingly every single musical element is generative, and the instrumentation, note density, and key are tied intimately to level progression and your actions as a player.

Ondskan - music system used in Uurnog and Ynglet.

Interactive music

Musical samples triggered by user actions on top of backing track.

Interactive music is generative music that is generated directly from player input. This gives the player the feeling of directly playing the music with their hands.

Otocky (1987) was an early example of interactive music as well as adaptive music. Musical notes were generated directly from players pressing the attack button.

In Amiga game Extase (1990), musical samples are triggered by player actions on top of a backing track. Extase has sound design by Jumping Jack'Son composer Stéphane Picq.

Interactive music, player input directly triggering musical elements.

A group of games further developed the idea of using player input to generate musical sounds synchronized with a backing track. In Rez (2001), enemy hits trigger drum machine sounds added to the music mix. Similarly, in Tetris Effect (2018), direct controller input is quantized and then used to trigger samples on top of a backing track.
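Quantizing input to the backing track's grid, as described for Tetris Effect, can be sketched by delaying each input to the next grid point. The function name and the 16th-note grid are assumptions; rounding up rather than to the nearest point is one simple policy that avoids playing a sound before the button is pressed.

```python
import math


def quantize_up(t_s, bpm, subdivision=4, start_s=0.0):
    """Delay an input event to the next grid point at or after `t_s`.

    The grid has `subdivision` steps per beat (4 = sixteenth notes when
    the beat is a quarter note); `start_s` is when the backing track began.
    """
    step = 60.0 / bpm / subdivision
    n = math.ceil((t_s - start_s) / step)
    return start_s + n * step


presses = [0.13, 0.25, 0.49]                        # hypothetical button-press times (s)
print([quantize_up(t, bpm=120) for t in presses])   # [0.25, 0.25, 0.5]
```

A finer grid means less delay between press and sound, at the cost of looser rhythmic alignment with the backing track.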

A different take on this idea is Killer Instinct, where every hit in an ultra combo plays a musical note, making successful combo execution extra fun for players by adding musical interactivity.[27]

Non-adaptive, Non-interactive Generative music

Music or samples can be generated at runtime as a space-saving measure. This was rarely used in games, but was used for cracker intros.

The early Lucasfilm Games sci-fi ball game Ballblazer (1985) generates non-repeating title screen music based on patterns that can be randomly selected and sped up. It doesn't adapt to game state at all, so it's non-adaptive.

Tim Follin's impressive 1-bit music for the ZX Spectrum is deterministic and thus non-adaptive.

Synchronized Scoring

Synchronized scoring.

Closely related to the topic of adaptive music is the topic of synchronizing music to game events and vice versa.

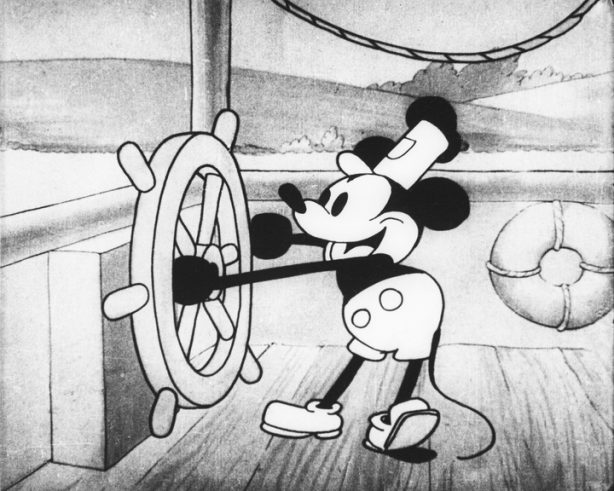

In film, 'synchronized scoring' refers to scenes where music is synchronized with the action. If the synchronization is very tight, as in Disney's classic Mickey Mouse cartoon 'Steamboat Willie' from 1928, it is referred to as 'Mickey Mousing'.[28]

The two approaches to creating such scenes are:

- syncing music to picture: The music is composed or edited to synchronize with an already recorded/animated film.

- syncing picture to music: The music is composed first, and the film is animated or edited to synchronize with the music. This was used in Disney's 'Fantasia', where animations were choreographed to fit classical music.

In games, the two techniques require very different technology.

Syncing music to picture

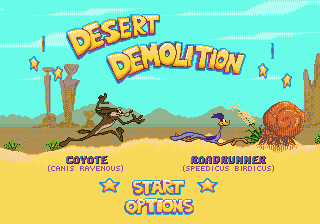

Extreme Mickey Mousing

Music can be synced to animations by triggering music based on animation events in a similar fashion to how Foley sound effects are triggered. A famous example of this technique can be found in Dig Dug (1982), and an even more detailed implementation can be found in 'Desert Demolition Starring Road Runner and Wile E. Coyote' (Genesis 1995).

It seems that composing and implementing music that tightly synchronizes to animations is very difficult, which probably limited how widely the technique was used.

Syncing picture to music (not adaptive music)

Synchronizes visuals to music.

Syncing picture to music in video games can be performed by adding metadata in the form of cue points to the music which then is used to trigger animations when they are reached.
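A minimal sketch of cue-point playback: cue times are stored as metadata, and each frame the game fires the animations whose cues the music has passed. The class and cue names are hypothetical; in practice the playback position should come from the audio engine rather than a separate game clock, so the visuals cannot drift away from the music.

```python
import bisect


class CueTrack:
    """Picture synced to music via cue points stored as metadata.

    `cues` is a list of (time in seconds, animation name). On each update
    we pass the music's current playback position and get back the
    animations whose cue points were crossed since the previous update.
    """

    def __init__(self, cues):
        self.cues = sorted(cues)
        self.times = [t for t, _ in self.cues]
        self.last_time = float("-inf")   # so a cue at 0.0 also fires

    def update(self, music_time_s):
        lo = bisect.bisect_right(self.times, self.last_time)
        hi = bisect.bisect_right(self.times, music_time_s)
        self.last_time = music_time_s
        return [name for _, name in self.cues[lo:hi]]


track = CueTrack([(0.5, "jump"), (1.0, "spin"), (2.0, "land")])
for now in (0.4, 1.0, 1.5, 2.5):
    print(now, track.update(now))
```

Firing every cue crossed since the last frame, rather than only cues that match the current time exactly, means no cue is skipped when a frame takes longer than usual. Looping music would need the position to wrap, which this sketch does not handle.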

Please note that this is not a form of adaptive music, but rather adaptive animation.

Rhythm games like Vib-Ribbon, Guitar Hero, the Rhythm Heaven series, Beat Saber, and Thumper all synchronize visuals to music in order to represent music-based gameplay in a visually unambiguous way.

In 140 (2013), the gameplay adapts directly to the music, by moving level elements in time with rhythmic elements. At the same time, the music adapts to player progress using horizontal re-sequencing and vertical remixing.

Hi-Fi Rush (2023) also adapts the gameplay to the music, but in a fully 3D environment. The game also has rhythm game elements, such as attacking 'on the beat'.

Rayman Legends (2013) has music-based levels where animations are synchronized to a song that plays linearly. Super Mario Bros. Wonder (2023) has similar levels with musical elements such as singing enemies.

Summary

Taxonomy of adaptive and generative music:

Adaptive Music (aka 'dynamic music'): music that changes as a result of a change in game state.

- Vertical Remixing: dynamically changing instrumentation based on game state. e.g.: Super Mario World Yoshi bongos
- Horizontal Re-sequencing: music part changes based on game state.
  - Hard resequencing, e.g.: Xenon music switch
  - Resequencing with transitions, e.g.: Monkey Island 2
- Synchronized Scoring
  - Syncing Music to Picture, also known as 'Mickey Mousing', e.g.: Dig Dug

Generative Music: music that is generated at runtime. Can be adaptive or non-adaptive.

- Interactive music: music is generated directly from player input. e.g.: Tetris Effect
- Note-generative music: individual notes are generated, for instance as MIDI messages. e.g.: Spore
- Realtime music synthesis, e.g.: Chronos (Tim Follin)

Changelog

- 2025-12-11 Split history of game sound into its own article

- 2025-02-16 Edited history section

- 2025-02-08 Added history section about sound and music in early games, recorded and synthesized sounds.

- 2025-02-05 Added section about sound and music in early games, and Space Invaders calculations as footnotes.

- 2024-12-16 Added details about Xenon and other games, edited structure

- 2024-12-12 Added figures

- 2024-12-04 Joined articles about adaptive and generative music

- 2024-12-02 Extracted Generative music article from tag description

- 2024-11-04 Collecting notes about adaptive and generative music

References

- Game On!: Video Game History from Pong and Pac-Man to Mario, Minecraft, and More. Dustin Hansen. MacMillan Publishing Group. ISBN 978-1-250-08095-0.

- MiSTer Arcade: Space Invaders, Radar Scope, Mars. Channel: syltefar.

- This increasing speed is a side effect of the way the enemies are updated on screen. The update is implemented as a round-robin algorithm, meaning that just one alien is updated every video frame. The Space Invaders display updates at 60 frames per second,[29] which makes it look relatively smooth. Initially, each alien moves every 55 frames, which at 60 FPS corresponds to around 0.9 seconds:

  $$\text{alien\_count} = 11 \times 5 = 55, \qquad \text{alien\_update\_period} = \frac{55\ \text{frames/update}}{60\ \text{frames/s}} \approx 0.92\ \text{s/update}$$

- The Space Invaders music tempo can be verified by recording a gameplay session and measuring the time between notes. Initially, there are around 870 ms between notes, and at its fastest, around 87 ms:

  $$\text{tempo} = \frac{1\ \text{beat}}{\text{period}\ \text{s}} \cdot 60\ \frac{\text{s}}{\text{min}} = \frac{60}{\text{period}}\ \text{BPM}$$

  $$\text{music\_tempo\_slow} = \frac{60}{0.870} \approx 69\ \text{BPM}, \qquad \text{music\_tempo\_fast} = \frac{60}{0.087} \approx 690\ \text{BPM}$$

- Pinball Manual: Xenon, by Bally. Bally Pinball Division (1980). Internet Archive.

- Wikipedia: Suzanne Ciani. Various authors. Wikipedia.

- Introduction to Adaptive Music in Games. Simon Hutchinson (2021). Series: Listening to Videogames.

- Clint Bajakian and Michael Land from LucasArts games interview. Clint Bajakian, Michael Land (2019, LucasArts). Channel: Ehtonal Canada, interview for Beep movie.

- Beep: A Documentary History of Game Sound. Karen Collins (2016).

- Game Sound: An Introduction to the History, Theory, and Practice [..]. Karen Collins (2008, MIT). ISBN 978-0-262-03378-7.

- How Did They Do That - Banjo-Kazooie's Dynamic Music. Rob Wass (2015). Channel: ClassicGameJunkie, series: How Did They Do That.

- Why 'No Music' was the goal in THE PATHLESS. Austin Wintory. Channel: Austin Wintory.

- Realtime Synthesis and Dynamic Music in Games. Jakob Schmid (2023). Slides for Sonic College talk.

- FROM JOURNEY TO ERICA - A look at Interactive music systems. Austin Wintory. Channel: Digital Dragons.

- Procedural Music in SPORE. Kent Jolly, Aarn McLeran (2008). Talk from GDC Vault.

- Dynamic Percussion System. Intelligent Music Systems. YouTube video.

- Papers by Daniel Brown. Author of the Dynamic Percussion System.

- Real-time Procedural Percussion Scoring in 'Tomb Raider's' Stealth Combat. Philip Lamperski, Bobby Tahouri (2016).

- What is 1-bit-music? Nikita Braguinski and 'utz'. Interview from ludomusicology.org.

- Peggle Blast: Big Concepts, Small Project. R. J. Mattingly, Jaclyn Shumate, Guy Whitmore (2017). Talk from GDC Vault.

- FRACT, 3D Adventure Game Played with Synths and Sequencers. Peter Kirn (2012). Blog post on cdm.link.

- The Generative Music and Procedural Sound Design of Sim Cell. Leonard J. Paul (2013). YouTube channel: School of Video Game Audio.

- Merging Audio and Gameplay in COCOON with Jakob Schmid. Austin Wintory (2023). YouTube channel: Academy of Interactive Arts & Sciences.

- Mini Metro - A Musical Subway (System). Rich Vreeland (2017). Talk from YouTube channel: Disasterpeace.

- Algorithms, apes and improv: the new world of reactive game music. Will Betts (2019).

- Every Ultimate Finisher All Ultra Combos Killer Instinct. Channel: AcidGlow.

- Wikipedia: Mickey Mousing. Various authors. Wikipedia.

- Computer Archeology: Space Invaders. Christopher Cantrell.